NewTek used to have a telestration application that let you draw over an NDI video source and output the annotations as a separate NDI source. Unfortunately it was discontinued a while back, but the need for something like it did not go away.

In the church tech scene, ProPresenter 6 had a Telestrator feature, although the application itself was quite sluggish (and I had never actually used Pro6’s Telestrator anyway). With online whiteboard teaching being common nowadays (given COVID-19), I wanted to find something that could replicate that telestration feature.

Safe to say I didn’t find one.

So as per the usual gist of my blog posts - I made my own.

This was a fun project to set out on, as it would be the second time I’ve used the NDI SDK (the first being when I made NDI Streamer). It had also been a while since I’d created anything with .NET or C#.

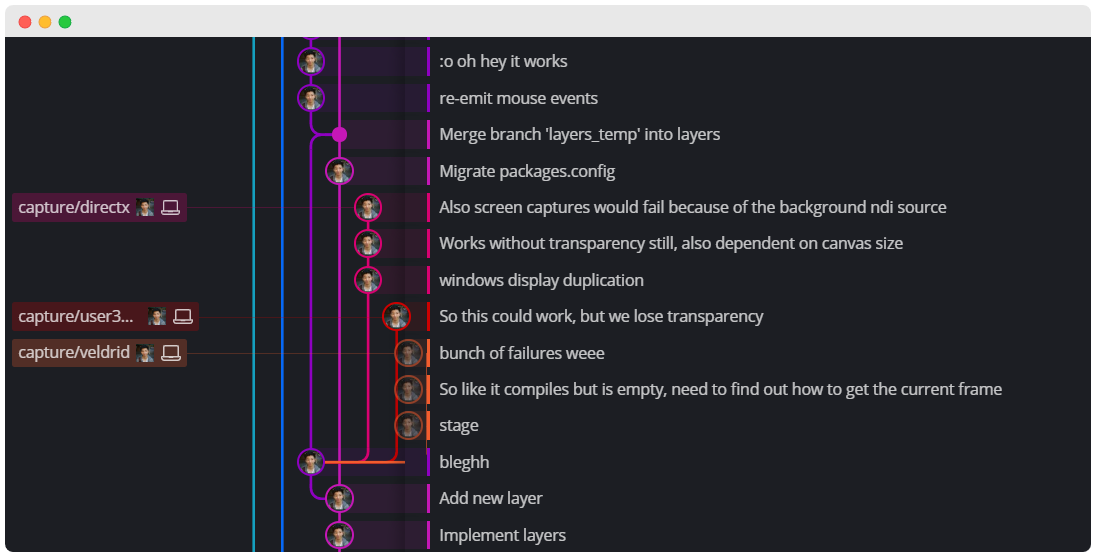

The project itself is a fork of NDI Whiteboard by a dude named Gerard. The original version only had basic functionality, but it was a good starting platform for me!

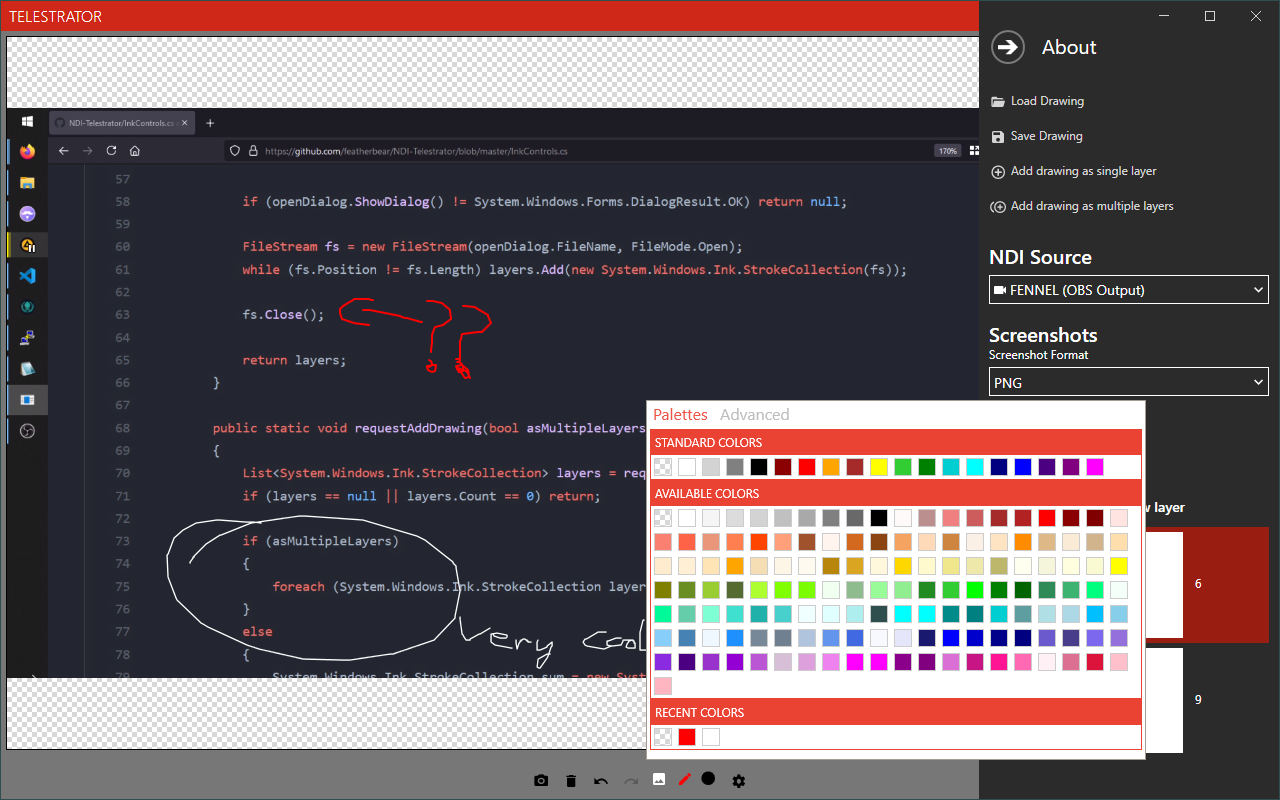

I implemented extra features that let me turn this “Whiteboard” into an overlay screen:

- Multiple ink layers

- Screenshots

- Undo / Redo

- Save / Load / Import

- (More) Efficient CPU / Network utilisation

- NDI background source (so I can see what I’m annotating on)

- Better colour / background / thickness selection

By far the hardest part of this project was efficiently capturing the ink canvas so it could be sent over NDI. I went down many rabbit holes trying to find an effective way to do it: hooking into OpenGL, DirectX, the low-level user32 library, and some other packages. However, my lack of graphics processing knowledge prevented me from getting too far.

I had some partial successes that were performant and fast, but they cost me the alpha / transparency capability. That was sorta a dealbreaker, as I did not want to transmit a chroma background that would have to be keyed out.

Also, given the nature of the NDI WPF SDK, the NDI video frames were being sent on the same thread as the rest of the program, which severely slowed everything down whenever frames were being transmitted.
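For illustration, one common way to avoid this kind of stall is to hand frames to a worker thread and let the main thread carry on. The sketch below is plain Python, not the C# from this project, and it is not a claim about how the NDI WPF SDK behaves internally. `BackgroundFrameSender` and `send_frame` are hypothetical names, with `send_frame` standing in for whatever call actually pushes a frame out over NDI. It assumes a single producer and keeps only the newest pending frame, on the assumption that a stale frame is not worth sending.

```python
import queue
import threading


class BackgroundFrameSender:
    """Sends frames on a worker thread so the caller (e.g. a UI thread) never blocks."""

    def __init__(self, send_frame):
        # send_frame is a hypothetical callable standing in for the real NDI send call.
        self._send_frame = send_frame
        self._frames = queue.Queue(maxsize=1)  # hold only the newest pending frame
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def submit(self, frame):
        """Queue a frame without blocking; a stale pending frame is dropped."""
        while True:
            try:
                self._frames.put_nowait(frame)
                return
            except queue.Full:
                try:
                    self._frames.get_nowait()  # discard the older frame
                except queue.Empty:
                    pass  # the worker took it first; retry the put

    def close(self):
        """Stop the worker after it has sent whatever is still pending."""
        self._frames.put(None)  # sentinel; waits until the queue has room
        self._worker.join()

    def _run(self):
        while True:
            frame = self._frames.get()
            if frame is None:
                break
            self._send_frame(frame)
```

With something like this, the drawing code would call `submit(frame)` after each canvas update and `close()` on exit. The slow send then only ever delays the worker thread, at the cost of occasionally skipping an intermediate frame.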

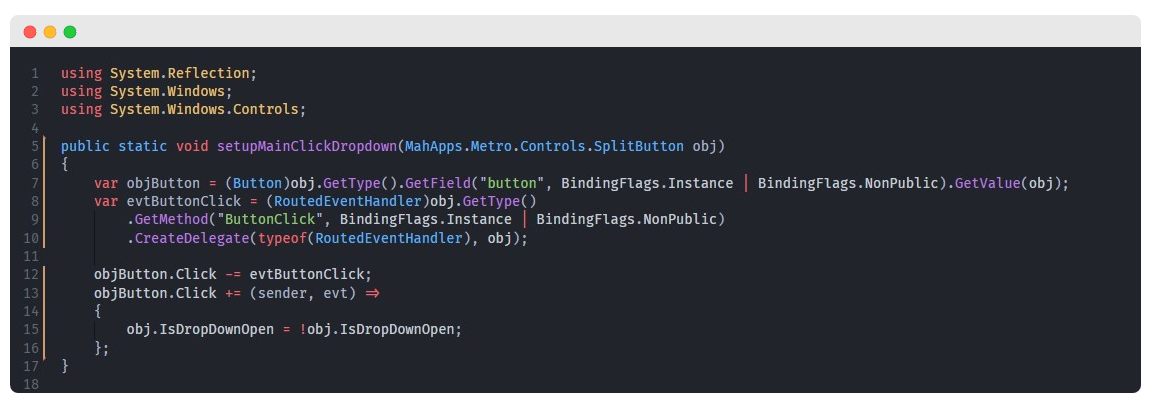

I eventually settled on some very hacky code that gave me relatively decent performance. Prior to this hack, active stylus inputs would not be transmitted over NDI until the stroke was complete, supposedly because of some gesture recognition thing going on. That made for a worse viewer experience, as viewers could not see strokes while they were being drawn, only the end result.

My solution was to capture the stylus inputs, cancel their events, and fire a mouse movement event instead. After much effort, this seemed to work. I’m not too proud of it, but I am happy that I reached a working and usable solution.
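To make the shape of that hack a bit more concrete, here is a toy Python model of the rerouting idea. It is only a sketch: it does not use WPF or any real input API, and `InputEvent`, `Canvas`, `dispatch` and `on_mouse_move` are all made-up names for illustration. The point is just that the stylus event gets marked as handled (cancelled) and an equivalent mouse movement is fired in its place, so each point lands on the canvas straight away.

```python
from dataclasses import dataclass


@dataclass
class InputEvent:
    kind: str            # "stylus" or "mouse"
    x: float
    y: float
    handled: bool = False


class Canvas:
    """Toy canvas that only draws in response to mouse movement."""

    def __init__(self):
        self.points = []

    def on_mouse_move(self, event):
        # Each point is recorded immediately, so it could be sent mid-stroke.
        self.points.append((event.x, event.y))

    def dispatch(self, event):
        if event.kind == "stylus":
            # Cancel the stylus event so no stylus-specific handling sees it...
            event.handled = True
            # ...and fire an equivalent mouse movement in its place.
            self.dispatch(InputEvent("mouse", event.x, event.y))
        elif event.kind == "mouse" and not event.handled:
            self.on_mouse_move(event)
```

Dispatching `InputEvent("stylus", 1.0, 2.0)` to a fresh `Canvas` leaves the original event marked as handled and adds the point to `points` through the mouse path.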

I’ve tried this application out once during one of the classes that I teach at uni, and it seems to work pretty well - I just need to remind myself to clear the annotations when I’m done explaining something haha :P